Chih-Kuan Yeh

Research Scientist at Google Brain

Biography

I am a research scientist at Google Brain. During my PhD at CMU, my research focused on understanding and interpreting machine learning models through more objective explanations (grounded in functional evaluations or theoretical properties). More recently, I have become interested in building better large-scale models with less (but more efficient) data, and in improving models using the understanding gained from model explanations. Feel free to contact me if you are interested in working with me.

- Artificial Intelligence

- Machine Learning

- Explainable AI

- Algorithmic Game Theory

- PhD Student in Machine Learning, 2017-2022 (expected), Carnegie Mellon University

- BSc in Electrical Engineering, 2016, National Taiwan University

Experience

Featured Publications

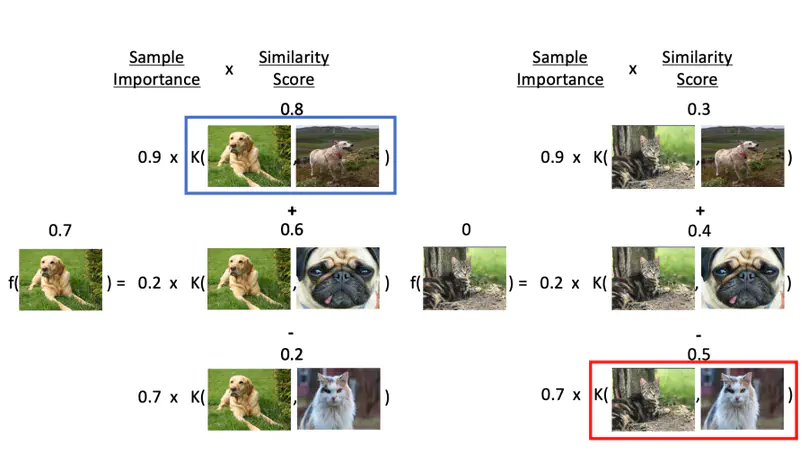

A new value function for use with the Shapley value that addresses the shortcomings of both on-manifold and off-manifold value functions.
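To make the term "value function" concrete, here is a minimal sketch of exact Shapley values computed by enumerating all coalitions, paired with one common off-manifold value function that replaces absent features with a fixed baseline. This is a generic illustration under that assumption, not the value function proposed in the featured paper; `shapley_values` and `off_manifold_value` are hypothetical names.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(n: int, value: Callable[[frozenset], float]) -> list[float]:
    """Exact Shapley values: weighted average of each feature's marginal
    contribution over all coalitions S of the other features.
    Makes O(n * 2^n) calls to `value`, so it is only practical for small n."""
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # k = size of coalition S (S excludes feature i)
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                coalition = frozenset(subset)
                phi[i] += weight * (value(coalition | {i}) - value(coalition))
    return phi


def off_manifold_value(
    model: Callable[[list[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> Callable[[frozenset], float]:
    """Hypothetical off-manifold value function (assumption for illustration):
    features outside S are set to a fixed baseline, ignoring any dependence
    between them and the features in S."""
    def v(coalition: frozenset) -> float:
        z = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
        return model(z)
    return v
```

For a linear model such as `2*z[0] + 3*z[1] - z[2]` explained at `x = [1, 1, 1]` against an all-zero baseline, each feature's attribution should come out as its weight times `x[j] - baseline[j]` (here about 2, 3, and -1), and the attributions sum to `model(x) - model(baseline)`. Because the baseline inputs may be unrealistic combinations of feature values, this choice is called "off-manifold"; on-manifold alternatives instead condition on the observed features.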